Following our Elastigroup & Nomad Integration, we now support autoscaling for Nomad users.

Scaling Nomad nodes has always been difficult, and autoscaling was the first request we received from Nomad users after our announcement. The Spotinst Nomad Autoscaler is our own proprietary solution that lets customers scale Nomad workloads economically based on Spot Instance prices and aggregated node utilization. And it’s absolutely free.

How the Nomad Autoscaler works

Our Nomad Autoscaler periodically checks for Jobs with queued allocations, ensuring there is enough capacity to schedule these allocations. Whenever there isn’t enough capacity, a scale-up event will be triggered.

Nomad Autoscaler also monitors the usage of all nodes to automatically terminate unneeded or underutilized nodes. If a node is not needed for an extended period and is not highly utilized, the Jobs will be scheduled elsewhere and the node will be terminated.

Container scaling based on “Headroom” & “Tetris Scaling”

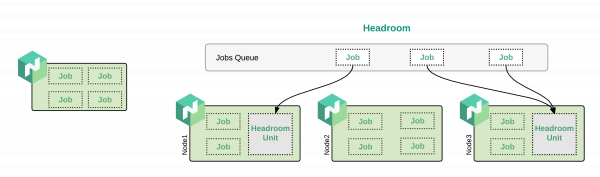

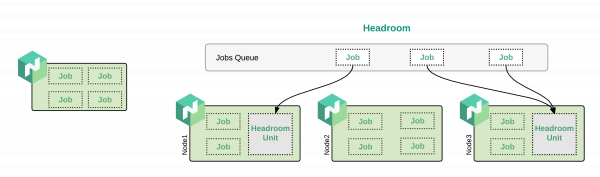

Headroom

Headroom is a buffer of spare capacity (in terms of both memory and CPU) that ensures you don’t have to wait for new instances when you need to scale more jobs, while also preventing instances from being over-utilized.

Each headroom unit consists of two definitions: one for CPU, “cpuPerUnit” (in MHz), and one for memory, “memoryPerUnit” (in MiB). In addition, you define the number of headroom units to reserve in the cluster (“numOfUnits”).

For example, let’s say that we define the headroom unit as 512 MiB of memory and 1000 MHz of CPU, and require a total of 10 units. On top of that, let’s assume that the cluster consists of 3 instances. The autoscaler sums the free headroom units across the entire cluster and checks whether the total meets the number configured in the group.

Let’s say the first instance has 2 whole free headroom units (in our example, at least 1024 MiB and 2000 MHz), the second instance has 3 whole units, and the third has 5 whole units. The cluster then has a total of 10 free headroom units, as configured in the group, so no scale-up is performed.

To see when a scale-up occurs, consider a different case: the first instance has 2 free units, the second has 3, and the third has only 4. The cluster now has a total of 9 free units. Because the group requires 10 free units, a scale-up is triggered automatically.
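The headroom check in this example is simple arithmetic. The Python sketch below reproduces it under two assumptions: each node’s free CPU (MHz) and free memory (MiB) are already known, and a unit counts only if it fits whole on a single instance, as the “whole units” wording above suggests. The names `NodeFree`, `units_on_node` and `headroom_shortfall` are hypothetical and not part of any Spotinst API.

```python
from dataclasses import dataclass


@dataclass
class NodeFree:
    """Free (unallocated) capacity on one Nomad client node."""
    cpu_mhz: int
    memory_mib: int


def units_on_node(node: NodeFree, cpu_per_unit: int, memory_per_unit: int) -> int:
    # A unit needs BOTH its CPU and its memory on the same node,
    # so the node contributes the smaller of the two whole-unit counts.
    return min(node.cpu_mhz // cpu_per_unit, node.memory_mib // memory_per_unit)


def headroom_shortfall(nodes, cpu_per_unit: int, memory_per_unit: int, num_of_units: int) -> int:
    """Return how many headroom units are missing (0 means no scale-up is needed)."""
    available = sum(units_on_node(n, cpu_per_unit, memory_per_unit) for n in nodes)
    return max(0, num_of_units - available)


# First case from the text: 2 + 3 + 5 = 10 free units.
case_1 = [NodeFree(2000, 1024), NodeFree(3000, 1536), NodeFree(5000, 2560)]
# Second case: the third instance only fits 4 units, so the cluster has 9.
case_2 = [NodeFree(2000, 1024), NodeFree(3000, 1536), NodeFree(4000, 2048)]

print(headroom_shortfall(case_1, 1000, 512, 10))  # 0
print(headroom_shortfall(case_2, 1000, 512, 10))  # 1
```

Taking the per-node minimum matters: an instance with plenty of free CPU but little free memory contributes only as many units as its memory allows.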

NOTE: Headroom takes precedence over scale-down operations.

Tetris Scaling

When a job is launched in Nomad, the Nomad master scheduler tries to find available capacity for the job to run. In some cases, there aren’t enough resources to meet the job’s demands.

In such a case, the job will have queued allocations and the job metrics will show that the job can’t run due to exhausted nodes.

Some of the reasons for node exhaustion are:

- Dimension “cpu exhausted” exhausted on 1 node

- Dimension “memory exhausted” exhausted on 1 node

- Dimension “network: reserved port collision” exhausted on 1 node

- No nodes were eligible for evaluation

The Nomad Autoscaler automatically detects these states and launches additional instances when required.
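A rough Python sketch of this detection, assuming the autoscaler inspects Nomad evaluations: Nomad’s HTTP API reports placement failures per task group in an evaluation’s “FailedTGAllocs” field (including the “NodesEvaluated” and “DimensionExhausted” metrics) and queued counts in “QueuedAllocations”. The function names are hypothetical, the input is a plain dictionary standing in for a decoded evaluation, and this is not the Spotinst implementation.

```python
def placement_failure_reasons(evaluation: dict) -> dict:
    """Map each task group with queued allocations to the reasons it could not be placed.

    `evaluation` stands in for a decoded Nomad evaluation; only the
    QueuedAllocations and FailedTGAllocs fields are read.
    """
    queued = evaluation.get("QueuedAllocations") or {}
    failed = evaluation.get("FailedTGAllocs") or {}
    reasons = {}
    for group, metric in failed.items():
        if queued.get(group, 0) == 0:
            continue  # nothing is waiting for this task group
        group_reasons = []
        if metric.get("NodesEvaluated", 0) == 0:
            group_reasons.append("No nodes were eligible for evaluation")
        exhausted = metric.get("DimensionExhausted") or {}
        for dimension, count in sorted(exhausted.items()):
            group_reasons.append(f'Dimension "{dimension}" exhausted on {count} node(s)')
        reasons[group] = group_reasons
    return reasons


def needs_scale_up(evaluation: dict) -> bool:
    """Scale up when any queued task group failed for one of the reasons listed above."""
    return any(placement_failure_reasons(evaluation).values())


example = {
    "QueuedAllocations": {"web": 2},
    "FailedTGAllocs": {
        "web": {"NodesEvaluated": 3, "DimensionExhausted": {"memory exhausted": 3}},
    },
}
print(placement_failure_reasons(example))
print(needs_scale_up(example))  # True
```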

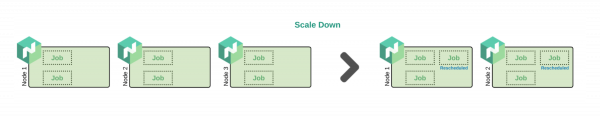

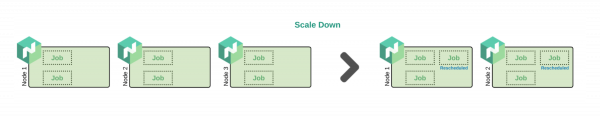

Scaling Down

Once the Nomad Autoscaler is enabled on a group, Elastigroup monitors the Nomad cluster for idle instances. An instance is considered idle when both its CPU and its memory utilization are below 40%.

When an instance stays idle for the configured number of consecutive evaluation periods (“evaluationPeriods”), a scale-down is triggered. Elastigroup finds spare capacity on other instances, drains the idle instance’s jobs, reschedules those jobs on the other instances, and terminates the idle instance.

NOTE: Scale-down actions are limited to 10% of the cluster size at a time.
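The idle rule and the 10% cap can be sketched in Python as follows. The sketch assumes per-node CPU and memory utilization arrive as fractions once per evaluation period, reads “10% of the cluster size” as the node count divided by 10 (rounded down, but at least one node), and drains the least-utilized candidates first; all three are assumptions, and `ScaleDownTracker` is a hypothetical name. It also omits the headroom check: because headroom takes precedence, a fuller version would skip any candidate whose removal leaves fewer free units than “numOfUnits”.

```python
from dataclasses import dataclass, field

IDLE_THRESHOLD = 0.40  # idle means CPU AND memory utilization below 40%


@dataclass
class ScaleDownTracker:
    evaluation_periods: int  # mirrors "down.evaluationPeriods" in the API body
    idle_streak: dict = field(default_factory=dict)  # node id -> consecutive idle periods

    def observe(self, utilization: dict) -> list:
        """Record one evaluation period and return the nodes to drain and terminate.

        `utilization` maps node id -> (cpu_fraction, memory_fraction).
        """
        # Forget nodes that are no longer in the cluster.
        for node in list(self.idle_streak):
            if node not in utilization:
                del self.idle_streak[node]

        # Extend the streak for idle nodes; reset it for busy ones.
        for node, (cpu, mem) in utilization.items():
            idle = cpu < IDLE_THRESHOLD and mem < IDLE_THRESHOLD
            self.idle_streak[node] = self.idle_streak.get(node, 0) + 1 if idle else 0

        # Nodes idle for enough consecutive periods, least utilized first.
        candidates = sorted(
            (n for n, streak in self.idle_streak.items() if streak >= self.evaluation_periods),
            key=lambda n: sum(utilization[n]),
        )

        # Assumption: "10% of the cluster" = node count // 10, never less than one node.
        limit = max(1, len(utilization) // 10)
        return candidates[:limit]


tracker = ScaleDownTracker(evaluation_periods=5)
for _ in range(5):
    to_remove = tracker.observe({"node-a": (0.15, 0.20), "node-b": (0.70, 0.35)})
print(to_remove)  # ['node-a']
```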

Constraints Support

The Nomad Autoscaler supports job-level constraints. In your job configuration, you can define multiple constraints that determine which nodes are eligible to run the job.

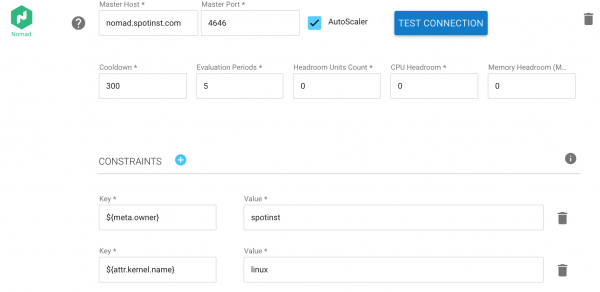

To support job constraints in your Nomad Autoscaler, create an Elastigroup for each combination of constraints and integrate it with your master host, as shown below. In the Nomad Autoscaler integration, you can add multiple constraints as key-value pairs (up to 30 constraints). Each key must use exactly the same format as the corresponding constraint in your job configuration.

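Let’s say that you want to run your jobs only on nodes that are marked as owned by ‘Spotinst’. First, mark those nodes with client metadata in the Nomad client (agent) configuration:

```hcl
# Setup data dir
data_dir = "/tmp/client1"

# Enable the client
client {
  enabled = true
  servers = ["<NomadServerElasticIP>"]

  # Mark the node as owned by Spotinst ("owner" is an example key)
  meta {
    owner = "Spotinst"
  }
}
```

The job then targets those nodes with a constraint whose attribute is “${meta.owner}” and whose value is “Spotinst”. The matching Elastigroup constraint uses the same key, “${meta.owner}”, with the value “Spotinst”.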

If you need constraint support in your Nomad Autoscaler, create an Elastigroup for each combination of constraints you use. Each group is responsible only for autoscaling the nodes that match its constraint combination. If you also run jobs without constraints, create an Elastigroup without constraints to autoscale them. In short, you need a unique Elastigroup for every constraint combination, including the combination with zero constraints.
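One way to picture the one-group-per-combination rule is an exact-set lookup: a job is served by the Elastigroup whose constraint list matches the job’s constraints key for key and value for value. This Python sketch assumes equality-only constraints (no operators such as “regexp”), takes the job’s constraints as (attribute, value) pairs, and uses hypothetical group names; it illustrates why keys must be written identically, not how Elastigroup performs the match internally.

```python
def group_for_job(job_constraints, groups):
    """Return the Elastigroup whose constraint set exactly equals the job's, or None.

    job_constraints: (attribute, value) pairs copied from the job's constraint blocks.
    groups: group name -> list of {"key": ..., "value": ...} dicts, as in the API body.
    """
    wanted = frozenset(job_constraints)
    for name, group_constraints in groups.items():
        have = frozenset((c["key"], c["value"]) for c in group_constraints)
        if have == wanted:  # plain string comparison: key formats must match exactly
            return name
    return None  # no group is responsible for this combination


# Hypothetical groups: one per constraint combination, plus one with no constraints.
groups = {
    "spotinst-nodes": [{"key": "${meta.owner}", "value": "Spotinst"}],
    "unconstrained": [],
}
print(group_for_job([("${meta.owner}", "Spotinst")], groups))  # spotinst-nodes
print(group_for_job([], groups))                               # unconstrained
print(group_for_job([("meta.owner", "Spotinst")], groups))     # None
```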

Nomad Configuration: Getting Started

Provide the Nomad server address (IP) and port, then test the connection to the server with a valid Nomad API call.
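To check reachability yourself before saving the integration, any lightweight Nomad HTTP API call will do. This Python sketch uses “GET /v1/status/leader”, which returns the address of the current cluster leader; the console’s own test may use a different call. `nomad_leader` is a hypothetical helper, and 4646 is Nomad’s default HTTP port, so pass your own port if you changed it.

```python
import json
import urllib.request


def nomad_leader(host: str, port: int = 4646, timeout: float = 3.0):
    """Return the leader address reported by Nomad, or None if the call fails."""
    url = f"http://{host}:{port}/v1/status/leader"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            # The endpoint returns a JSON string such as "10.0.0.5:4647".
            return json.loads(resp.read().decode("utf-8"))
    except (OSError, ValueError):
        # URLError, HTTPError and timeouts are OSError subclasses;
        # a non-JSON body raises ValueError (JSONDecodeError).
        return None
```

A call such as `nomad_leader("12.123.123.123", 1234)` returning None means the address, port, or network path needs attention before enabling the autoscaler.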

NOTE: When the Nomad Autoscaler is enabled, weighted scaling is disabled.

Console Configuration:

Set the fields in the Integration section under the “Compute” tab of the Elastigroup creation wizard.

API Configuration:

To configure the integration through the API, update the Elastigroup with the Elastigroup Update API using the following body (adjust “masterHost” and “masterPort” to match your Nomad server):

```json
{
  "group": {
    "thirdPartiesIntegration": {
      "nomad": {
        "masterHost": "12.123.123.123",
        "masterPort": 1234,
        "autoScale": {
          "isEnabled": true,
          "cooldown": 300,
          "headroom": {
            "cpuPerUnit": 1000,
            "memoryPerUnit": 2000,
            "numOfUnits": 1
          },
          "down": {
            "evaluationPeriods": 5
          },
          "constraints": [
            {
              "key": "${meta.myKey}",
              "value": "myValue"
            },
            {
              "key": "${attr.kernel.name}",
              "value": "linux"
            }
          ]
        }
      }
    }
  }
}
```
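If you generate this body from code, a small Python helper can keep the field names consistent and enforce the 30-constraint limit mentioned earlier. `nomad_integration_body` is a hypothetical name; the sketch only builds the dictionary and leaves sending it to the Elastigroup Update API to your HTTP client of choice.

```python
import json

MAX_CONSTRAINTS = 30  # limit stated for the Nomad Autoscaler integration


def nomad_integration_body(master_host, master_port, cpu_per_unit, memory_per_unit,
                           num_of_units, evaluation_periods, cooldown=300, constraints=()):
    """Build the Elastigroup update body for the Nomad integration."""
    constraint_list = [{"key": k, "value": v} for k, v in constraints]
    if len(constraint_list) > MAX_CONSTRAINTS:
        raise ValueError(f"at most {MAX_CONSTRAINTS} constraints are supported")
    return {
        "group": {
            "thirdPartiesIntegration": {
                "nomad": {
                    "masterHost": master_host,
                    "masterPort": master_port,
                    "autoScale": {
                        "isEnabled": True,
                        "cooldown": cooldown,
                        "headroom": {
                            "cpuPerUnit": cpu_per_unit,        # MHz
                            "memoryPerUnit": memory_per_unit,  # MiB
                            "numOfUnits": num_of_units,
                        },
                        "down": {"evaluationPeriods": evaluation_periods},
                        "constraints": constraint_list,
                    },
                }
            }
        }
    }


body = nomad_integration_body(
    "12.123.123.123", 1234, 1000, 2000, 1, 5,
    constraints=[("${meta.myKey}", "myValue"), ("${attr.kernel.name}", "linux")],
)
print(json.dumps(body, indent=2))
```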

Using the Spotinst Nomad Autoscaler helps you manage your containers and makes it even easier to use Spot Instances when running on Nomad. Get started with Elastigroup’s Nomad Autoscaler.