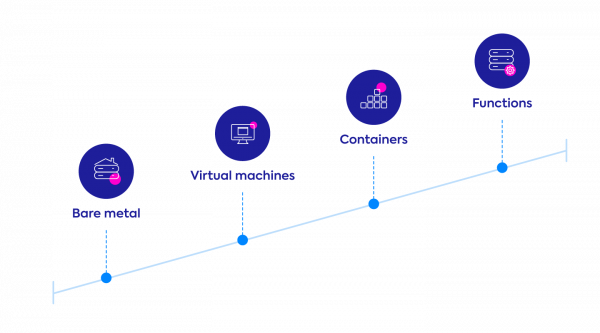

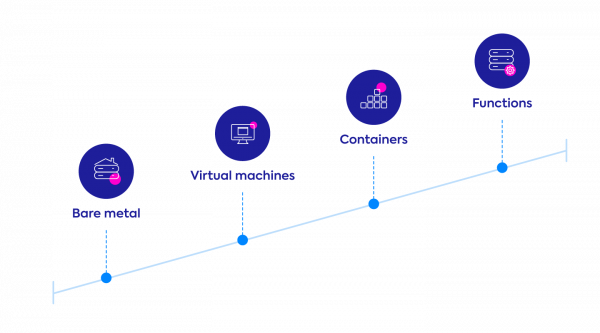

Over the past 15 years, application architectures have evolved dramatically: from bare-metal servers to virtualization, and then to elastic compute, autoscaling and containers. Recently, we’ve seen another shift, to the serverless paradigm, where even the operating system is abstracted away from developers and ops teams. Customers want fully managed compute, but they still want to use their existing application development tools and pipelines.

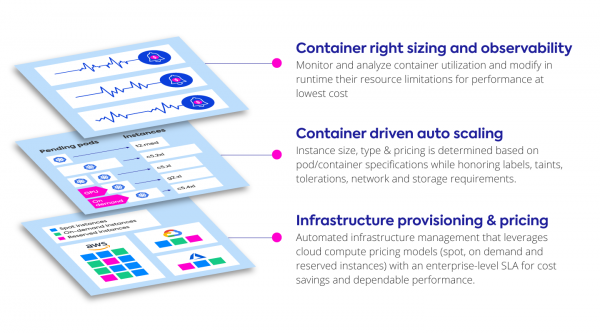

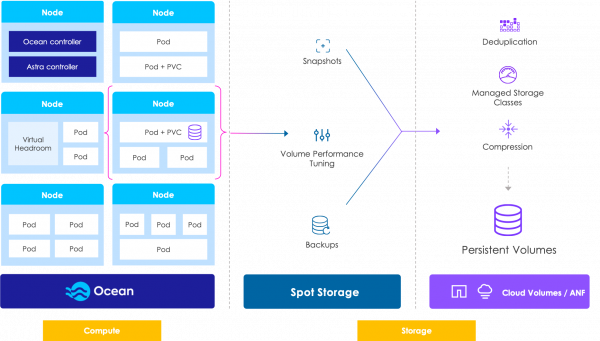

In the past two years, Spot has delivered the serverless experience of fully managed compute for Kubernetes and container deployments with Spot Ocean. With core capabilities for intelligent provisioning, autoscaling, right-sizing and monitoring, Ocean focuses compute infrastructure on meeting container and application requirements. When applications need to scale, Ocean automatically provisions the most reliable and most efficient instances that meet container specifications. Ocean gives developers the compute they need to scale their applications as far and as fast as they require, without the operational headaches.

At Spot, we called this serverless containers. The term wasn’t common when we started using it, but it perfectly describes the hands-free experience we give our users. The concept quickly resonated: customers adopted Ocean faster than any other product we have ever developed, and today hundreds of customers use Ocean, from large enterprises to tech unicorns and small startups.

Ocean helps customers solve complex operational challenges at multiple layers, from choosing the right instance pricing model and server size to autoshaping container resource requirements at runtime.

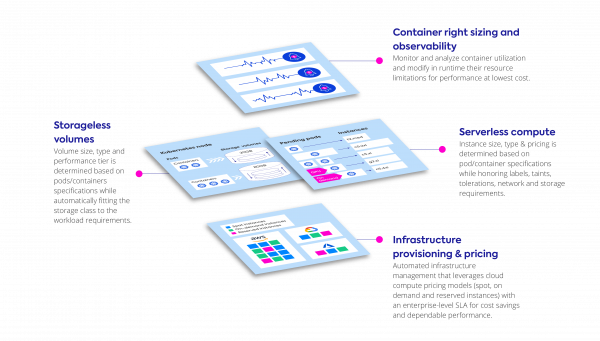

Now, we’re introducing the concept of storageless into the Ocean platform to eliminate the complexities and overhead of storage management. Just as your containers request varying types of compute resources (CPU, GPU, memory), clusters request storage resources, typically consumed as persistent volumes with different properties for size, shape and access modes.

With storageless volumes, much like with serverless computing, the allocation of these resources is dynamically managed based on how the application is actually consuming them, not on pre-purchased units. This approach enables developers and operators to focus on SLOs without the need to think about storage classes, performance, capacity planning or maintenance of the underlying storage backends.

With these capabilities, application owners can shift even more operational responsibility to Ocean, and teams can focus on their core applications instead of managing storage volumes and data. The promise of storageless infrastructure is that teams can build and run applications without designing the size and shape of persistent volumes or handling their throughput, maintenance and capacity provisioning.

When combined with Ocean’s serverless capabilities, storageless volumes give Kubernetes users everything required to run and scale applications with high availability and with no administration required from users. With this application-driven approach, container requirements set the behavior of the infrastructure, while Ocean automatically provisions, right-sizes and optimizes both Kubernetes nodes and storage classes for the highest performance at the lowest possible cost. These cost savings are driven by thin provisioning, deduplication and compression, coupled with automatic volume shaping and sizing. Users define simple storage requirements, which are maintained as storageless volumes. When pods request storage, Ocean delivers volumes that have been auto-shaped to match those requirements, with the right storage type (IOPS) and the right volume size (GiB).

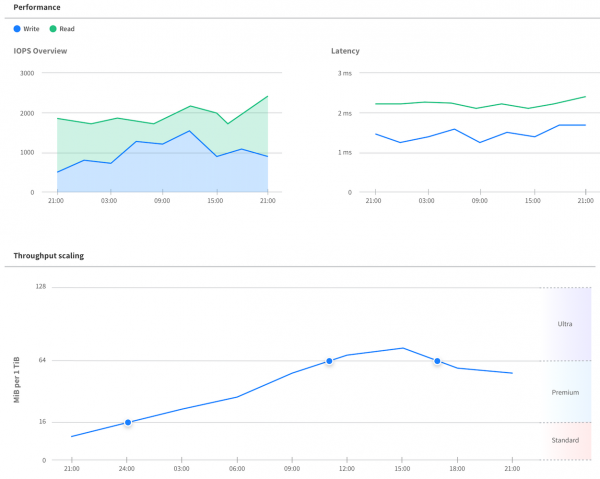

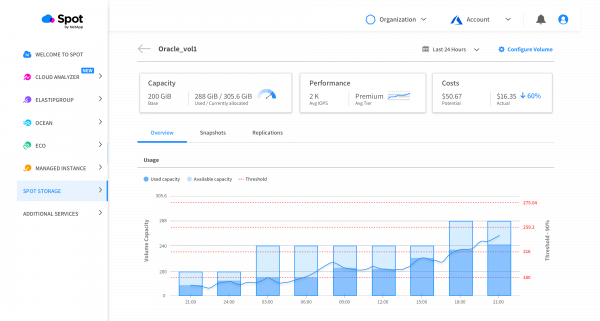

Within the Ocean console, users can manage their disk storage: create volumes, attach them to containers and enable dynamic sizing. For instance, to optimize performance and throughput, users specify the maximum tier (IOPS) for their volumes, and Ocean automatically shifts volumes up or down between tiers based on current application needs.
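The tier-shifting behavior described above can be sketched as a small selection function. This is a minimal illustration under stated assumptions, not Ocean's implementation: the tier ladder `TIERS_IOPS`, the 20% `headroom` factor and the function name `select_tier` are all hypothetical. The function picks the smallest tier that covers the observed peak IOPS plus headroom, and never goes above the maximum tier the user configured.

```python
from bisect import bisect_left

# Hypothetical tier ladder (provisioned IOPS per tier); not Ocean's actual tiers.
TIERS_IOPS = [3_000, 6_000, 12_000, 16_000]


def select_tier(observed_peak_iops: float, max_tier_iops: int, headroom: float = 1.2) -> int:
    """Return the smallest tier covering peak IOPS plus headroom, capped at the user's max tier."""
    if max_tier_iops not in TIERS_IOPS:
        raise ValueError(f"unknown max tier: {max_tier_iops}")
    target = observed_peak_iops * headroom
    # Only tiers at or below the user-specified maximum are eligible.
    allowed = [t for t in TIERS_IOPS if t <= max_tier_iops]
    # Index of the first eligible tier >= target; fall back to the cap if none is large enough.
    idx = bisect_left(allowed, target)
    return allowed[min(idx, len(allowed) - 1)]


print(select_tier(2_000, 16_000))   # light load: lowest tier
print(select_tier(4_000, 12_000))   # moderate load: shifts up one tier
print(select_tier(15_000, 12_000))  # heavy load: capped at the max tier
```

Because the selection is re-evaluated against current usage, the same volume moves up a tier when load rises and back down when it falls, while the user's maximum tier acts as a hard ceiling.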

Ocean also monitors volume capacity closely and lets users see the capacity usage of individual volumes. Based on that usage, Ocean automatically increases or decreases the volume size.
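A comparable sketch for capacity: the hypothetical `resize_volume` function below grows a volume when utilization passes a high-water mark and shrinks it when utilization drops below a low-water mark, resizing so that current usage lands at a target utilization. The thresholds (80% grow, 30% shrink, 60% target) and the 10 GiB floor are illustrative assumptions, not values published for Ocean.

```python
import math


def resize_volume(
    capacity_gib: int,
    used_gib: float,
    grow_at: float = 0.8,      # hypothetical high-water mark (80% full)
    shrink_at: float = 0.3,    # hypothetical low-water mark (30% full)
    target_util: float = 0.6,  # resize so the volume ends up ~60% full
    min_gib: int = 10,         # hypothetical floor on volume size
) -> int:
    """Return the new volume capacity in GiB (unchanged if usage is within the band)."""
    if capacity_gib <= 0:
        raise ValueError("capacity_gib must be positive")
    utilization = used_gib / capacity_gib
    if shrink_at <= utilization <= grow_at:
        return capacity_gib  # within the comfortable band: no change
    # Outside the band: size the volume so current usage lands at target_util.
    return max(min_gib, math.ceil(used_gib / target_util))


print(resize_volume(100, 90))  # nearly full: grows
print(resize_volume(100, 50))  # within band: unchanged
print(resize_volume(100, 12))  # mostly empty: shrinks
```

Keeping a gap between the grow and shrink thresholds avoids resizing back and forth when usage hovers near a single cutoff.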

These storageless features will give containerized applications the fully automated, dynamic infrastructure they need to scale and run. Storageless integration with Ocean is coming soon and will be available across AWS, GCP and Azure.

To learn more about storageless and Ocean, connect with us.